In a move to maintain its dominance in the fiercely competitive artificial intelligence (AI) hardware sector, NVIDIA Corporation has announced a new AI chip designed to reduce inference costs. The announcement comes amid intensifying rivalry from industry giants such as Google, Amazon, and AMD.

The tech giant specialises in graphics processing units (GPUs), which are currently the preferred chips for training the large language models (LLMs) behind generative AI software such as OpenAI’s GPT and Google’s Bard. However, NVIDIA’s chips are in short supply as startups, tech giants and cloud providers struggle to get GPUs to train their AI models.

The new AI chip, the GH200 Grace Hopper, uses the same GPU as NVIDIA’s highest-end AI chip, the H100. However, the GH200 pairs that GPU with 141 GB of advanced memory and a 72-core ARM main processor.

GH200 will reduce inference costs

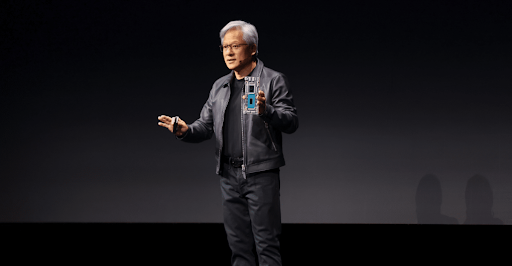

Speaking at a conference on Tuesday, CEO Jensen Huang said the AI chip is designed to help scale out the world’s data centres. He said, “We’re giving this processor a boost.”

Working with an AI model typically involves two stages: training and inference. The model is first trained on a large amount of data, which can require thousands of graphics processing units (GPUs). The trained model is then used to generate content through a process called inference. Inference, unlike training, takes place almost constantly and requires substantial processing power every time the software runs.

Huang said, “You can take pretty much any LLM you want and put it in this and it will infer like crazy.” NVIDIA expects this to cut the inference costs of LLMs.

NVIDIA designed the GH200 for inference: its large memory capacity allows larger AI models to fit on a single system. The new chip has 141 GB of memory, a substantial increase from the 80 GB of the H100.
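To make the memory comparison concrete, here is a rough back-of-the-envelope sketch of whether a model's weights alone would fit in 80 GB versus 141 GB. It rests on several assumptions that are not from NVIDIA: weights are stored at 2 bytes per parameter (16-bit precision), 1 GB is counted as 10^9 bytes, and the model sizes are hypothetical. It also ignores the extra memory that inference needs at run time, so real requirements would be higher.

```python
# Rough estimate: weight memory = parameter count x bytes per parameter.
# Assumptions (not from the article): 16-bit weights (2 bytes each),
# 1 GB = 10**9 bytes. Runtime overhead (caches, activations) is ignored.

GB = 10**9

# Memory capacities quoted in the article.
CAPACITIES_GB = {"H100": 80, "GH200": 141}


def weight_memory_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Return the memory needed to hold the model weights, in GB."""
    return num_params * bytes_per_param / GB


def fits(num_params: float, capacity_gb: float, bytes_per_param: int = 2) -> bool:
    """Return True if the weights alone fit within the given capacity."""
    return weight_memory_gb(num_params, bytes_per_param) <= capacity_gb


if __name__ == "__main__":
    # Hypothetical model sizes, chosen only for illustration.
    for params in (30e9, 70e9):
        needed = weight_memory_gb(params)
        for chip, capacity in CAPACITIES_GB.items():
            verdict = "fits" if fits(params, capacity) else "does not fit"
            print(f"{params / 1e9:.0f}B params need {needed:.0f} GB: {verdict} in {chip} ({capacity} GB)")
```

Under these assumptions, a hypothetical 70-billion-parameter model needs about 140 GB for its weights, which exceeds 80 GB but falls just under 141 GB. In practice the run-time overhead would leave very little headroom, so this is an illustration of the scale involved rather than a sizing guide.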

“Graphics and artificial intelligence are inseparable, graphics needs AI, and AI needs graphics”

NVIDIA CEO Jensen Huang on the updated GH200 Grace Hopper Superchip

When will GH200 be available?

The chip is expected to be available through the company’s authorized distributors in the second quarter of 2024, with samples expected by the end of 2023. NVIDIA has not yet disclosed pricing.

The unveiling follows a comparable move by NVIDIA’s rival AMD, which introduced its own AI-focused chip, the MI300X, with 192 GB of memory. AMD is actively marketing the MI300X for AI inference, setting the stage for a contest between the two chipmakers.